We understand that it might be tempting for managing editors at society and institutional journals to opt to stick to what has proved to be working again and again over the decades. “If it ain’t broke, don’t fix it”, right?

Yet, here comes the elephant in the room: science is all about staying ahead of your time and constantly evolving.

Even though there are scientists who easily fall in love with the ink-scented charm of a century-old journal, there are many more who have long passed those over in favour of commercial journals that have never seen a printing press.

The reasons could be anything from the topic of their manuscripts missing from the otherwise extensive Scope and focus list, to an annoying submission portal that simply fails to deliver their cover letter to the right inbox.

Don’t get us wrong: we do hold dear so many publication titles that have been around throughout our lives and before us. This is exactly why we felt like sharing a list of the most common mistakes – in our experience – that may distance today’s active and prolific scientists from smaller society and institutional journals.

Mistake 1:

Not following the trends in scholarly publishing

Given the high-tech and interconnected society that we are living in, it comes as no surprise that the past few decades have seen as many shifts and turns in how researchers produce, share and reuse their scientific outputs as the previous several generations put together.

From digital-first journals to Open Access by default; from PDF files to HTML articles enriched with relevant hyperlinked content and machine-interpretable publications; from seamlessly integrated preprint platforms to ‘alternative’ scientific publications from across the research process: the room for innovation in the academic publishing world seems greater than ever before.

On the other hand, endless possibilities call for new requirements, policies and standards, which in turn should be updated, revised and replaced in a no less timely manner.

We have observed all key stakeholders – including major funders of research – demanding immediate open access to published research in a bid to bring down barriers to scientific knowledge. Initiatives such as Plan S by cOAlition S, with its call for publishers to communicate their APC policies publicly, are making sure that scholarly publishing is as transparent as possible.

Similarly, the OA Switchboard, a community-driven initiative with the mission to serve as a central information exchange hub between stakeholders about open access publications, makes it easier for both authors of research and journal owners to efficiently report to funders about how their resources are spent.

Today, amongst the key talking points you can find research integrity, including a wide range of internal practices and policies meant to prove the authenticity and trustworthiness of scholarly publishers and – by association – their journals and content. Another thing we will be hearing about more and more often is AI tools and assistants.

Failure to abide by the latest good practices and principles could mean that a journal appears abandoned, predatory or – in the case of legacy journals – possibly hijacked.

While it is tough to stay up to date with the latest trends, subscribing to the newsletters and communication channels of the likes of OASPA (have you checked their Open Access journals toolkit yet?), cOAlition S and ISMTE is definitely a good place to start.

Mistake 2:

Not revisiting journal websites content- and technology-wise

As they learn and research trends in the scholarly publishing world, journal managers need to be ready to carefully consider and apply those novelties that are either mandatory, advisable or useful for their own case.

Additionally, they need to work closely with the editorial and customer support teams to stay alert for any repeated complaints and requests coming from their authors and other journal users.

Today, for example, most online traffic happens on mobile devices, rather than desktop computers. So, are a journal’s website and its article layout (including PDFs) mobile-friendly? On the other hand, has the journal’s website been optimised enough to run smoothly even under the pressure of dozens of tabs open in the same browser?

Another area that needs continuous monitoring concerns journal policies and user guidelines. Are those up to date? Are they clear, easily accessible and efficiently communicated on the journal website?

From an academic perspective, does the journal’s scope and focus – as described on the website – explicitly mention today’s ‘hot’ and emerging areas of research in the field? Keep in mind that these topics do more than make a journal’s About section look attractive to the website visitor. These are the research topics in which its potential authors are most likely investing their efforts, and on which the funders of research are spending their resources as we speak.

An outdated technological infrastructure might create an impression of a dodgy publication title. Failure to find key information – including a journal’s Open Access policy or an exhaustive list of the research topics considered for publication – could be why potential authors turn down a journal upon their first website visit.

Mistake 3:

Not making the most of journal indexing

Having a journal indexed in as many major databases relevant to its scientific field as possible is not only a matter of prestige.

Most importantly, making a journal’s content accessible from multiple sources – each frequented by its own users – naturally increases the likelihood that researchers will discover and later use and cite it in their own works.

Additionally, being able to access the journal from trusted and diligently maintained databases – such as the Directory of Open Access Journals (DOAJ) – is a reassurance for potential authors and readers that the journal is indeed authentic.

Of course, journal indexing is also a constantly evolving ecosystem.

Relatively recent platforms like Altmetric and Dimensions were launched to track ‘alternative’ attention and usage of published research, including mentions on social media, news media and official documents – all of which fall outside the category of academic publications, but nonetheless have their own remarkable societal impact.

The good news is that many indexers – including Altmetric and Dimensions – are integrated on a publisher-/platform-level, which means that journal managers and owners do not need to do anything to make their content more accessible and transparent, as long as their title is part of a professional scholarly publisher’s or a publishing platform’s portfolio.

However, there are databases like Scopus and Web of Science that require journals to submit their own applications. Usually, such application processes demand a considerable amount of manual input and effort before the necessary steps are completed and the application is approved.

Often, these processes take several years to complete, since journals may fail to meet the eligibility criteria for acceptance. In fact, it is not rare for a journal to never qualify. Note that most major indexes impose an embargo on journals that have been refused acceptance, so that they may take time to resolve their issues before reapplying a few years later.

Failure to get a journal indexed by key scientific databases negatively impacts the discoverability and citability of its content. Further, it may also harm the reputation and even legitimacy of the title within academia.

Mistake 4:

Not catering for authors’ financial struggles

Funding scarcity and inequalities are a major concern within academia and the scientific world, but even more so in particular branches of science. Moreover, the issue cascades through all actors involved, including funders of research, research institutions, scientists, librarians, scholarly publishers and journal owners.

Unfortunately, it is way too common to have researchers turn away from their favourite journals – including those they have themselves committed to by becoming active editors or reviewers – because they cannot cover even a very reasonable article processing charge.

No less unfortunate is the scenario where a privately working or underfunded scientist opts for a paywalled publication in a subscription or a hybrid journal that has essentially traded the public good for thousands of euro at a time.

On the other hand, scholarly publishing does incur various costs on the journal’s and the publisher’s sides as well, since the publishing and all related activities (e.g. production, indexation, dissemination and advertisement) – necessary for a journal and its content to retain quality, sustainability and relevance in the modern day – all demand a lot of technological resources, manual input and expertise.

A seemingly easy solution adopted by many large institutions is to sign agreements with a few of the major publishing houses covering (a selection of) their top titles. However, such practices only deepen the great divide, while killing off smaller journals that simply cannot handle their own annual costs.

The ideal is to have enough resources provided by the institution or society behind the journal to run their journal(s) under a Diamond Open Access model, where both publishing and accessing publications are free to all by default.

However, most institutions are themselves under substantial financial pressure, and need to work on a tight budget. Further, it is often impossible to estimate the publication volume of a journal within a time frame as short as the next calendar year.

A good solution in cases of limited budgets is for a journal to work out its own model, where the available institutional resources cover only a part of the APCs (but still enough to substantially help the authors!) or only a particular subset of authors (e.g. researchers affiliated with the institution). Alternatively, the journal could find ways to redirect funds from already fully funded authors to their less privileged peers. In the best-case scenario, any custom operational plan would remain flexible in case of a major change in circumstances.

On top of the more or less standard waivers and discounts available to, for example, authors from developing countries (according to the World Bank) and retired scientists, we highly recommend that journals provide further waivers for other groups of underprivileged authors, such as unemployed researchers, early-career researchers or otherwise marginalised groups (e.g. political immigrants). A good practice we often recommend to client journals is to pledge loyalty to their journal users by regularly providing APC waivers in recognition of their continuous contributions.

Mistake 5:

Not engaging with the editors

Apart from its name appearing in the most trustworthy databases, a journal catches the eye of new authors thanks to its ambassadors. In a world more or less run by popular influencers, it comes as no surprise that a high-profile scientist who takes a stand either for or against a particular publication title can easily tip the scales for a hesitant colleague.

Now, think about all editors at a journal. Given they have already agreed to commit their time and work to it, they must already be fond of it, mustn’t they? Then, there are all those experts affiliated with the institution or society behind the journal. Even if not necessarily involved in the editorial work, they must also be fans and friends of the journal by association.

So, here you have a whole community ready and happy to advocate for the journal if given the chance and a simple reminder that a few words of theirs would go a long way. Further, all of them have their own professional networks, which often include dozens of budding early-career researchers who look up to them as mentors.

That said, way too often we see good legacy journals that are simply not reaping the benefits of the community they have already created. We cannot stress enough how crucial it is to regularly invoke that sense of community among journal editors.

At the end of the day, a journal’s management team is in the best position to act as a link between the journal and its editors, as well as the publisher and its marketing team, who should be able to assist any communication efforts with the right platforms, tools and resources.

If a journal is not efficiently tapping into its immediate network of advocates and ambassadors, it not only misses out on a great opportunity to extend its network of loyal readers and authors. It may even risk losing ground among its very own affiliates. After all, if it were a good publication source, it would be supported by at least its in-house team, wouldn’t it?

Mistake 6:

Not having a varied communication strategy

Speaking of awareness – or the lack thereof – we are also sad to see many society and institutional journals overlooking the communication of scientific content to their journal’s immediate audiences, as well as the public at large.

On the one hand, there is the issue of experts duplicating others’ efforts simply because they are not aware of their colleagues’ work, having not run into that particular study at the time of writing. On the other, there is the layperson who way too often falls into the ‘fake news’ trap, all because the relevant science has failed to make it to the appropriate news media channels.

Given the overwhelming volume of scholarly as well as popular science information, it is the primary source of the content that needs to take measures to efficiently communicate it to the interested audiences.

After all, simply having research findings ingested by academic databases is rarely enough to reach scientists in the field who are not actively working on a study at the time, and are thus not spending much time querying those aggregators. Similarly, one cannot rely on a layperson to fully comprehend scientific jargon, even if they wish to double-check a random science-y claim on the Internet against its original source.

Conversely, when scientists stumble across attractive findings while scrolling through social media in their free time, they might be more likely to think of the same paper the next time they are working on their own studies.

Meanwhile, laypeople might be less likely to blindly trust information about the latest medical breakthrough coming from a stranger on the Internet if they had already read about the research behind the story in several trusted news media outlets that have verified their news stories.

Failure to actively and efficiently communicate content published in the journal using various platforms (e.g. journal newsletters, dedicated social media accounts, press announcements, scientific conferences) and language appropriate for their audiences might pave the way for large-scale misinformation, in addition to decreased discoverability and citability of the published content and of journals at large.

***

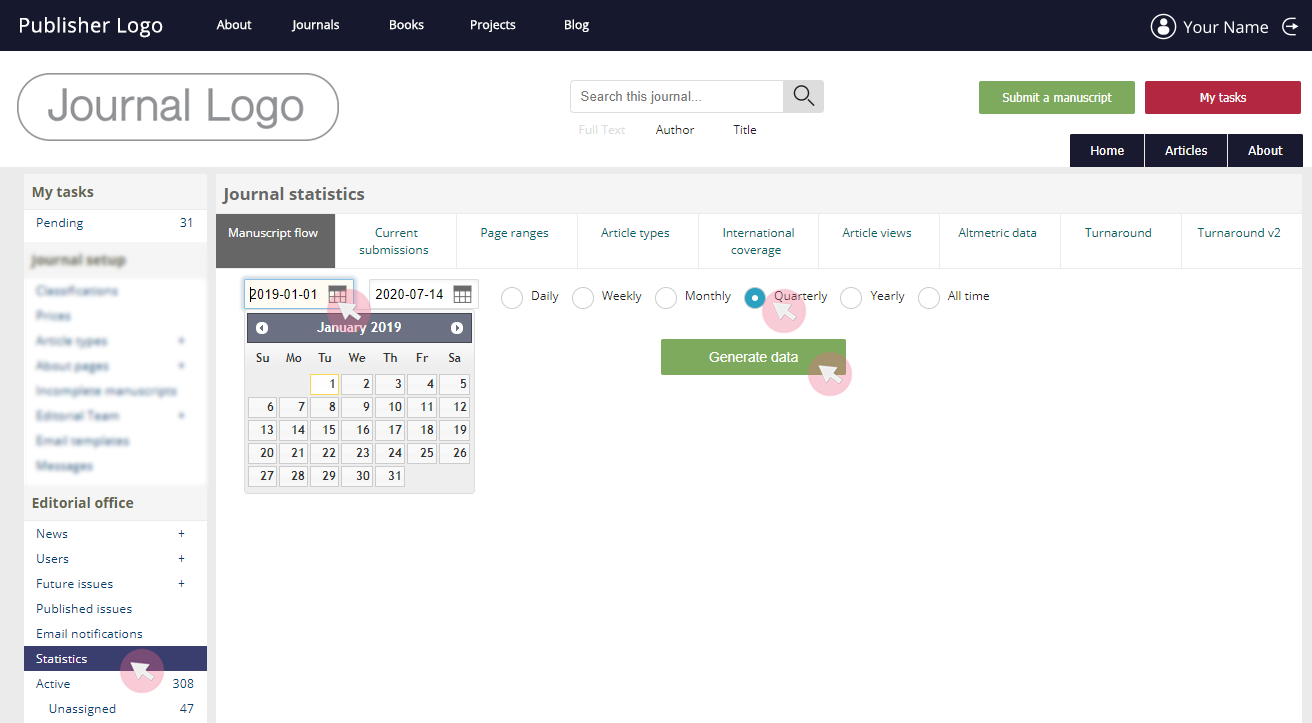

ARPHA Platform was designed to provide a highly flexible, customisable and accessible end-to-end publishing solution specially targeted at smaller journals run by scientific institutions and societies. In ARPHA, client journals and their journal managers will find a complete set of highly automated and manually provided services meant to assist societies and institutions in the production, but also in the management and further development of their journals. We invite you to explore our wide range of services on the ARPHA website.
